Scaling containers is more than just slapping a proxy in front of a service and walking away. There’s more to scale than just distribution, and in the fast-paced world of containers there are five distinct capabilities required to ensure scale: retries, circuit breakers, discovery, distribution, and monitoring.

In this post on the art of scaling containers, we’ll dig into distribution.

Distribution

The secret sauce of scaling anything has always relied, in part, on how requests are distributed across a finite set of resources. Not even cloud and its seemingly infinite supply of compute changes that recipe. At the moment a request is received, the possible list of resources which can accept that request and process it is finite. Period.

The decision, then, on where to direct a request becomes fairly important. Send it to the wrong resource, and performance may suffer. Send it to the right resource, and the consumer/employee will be delighted with the result.

In the early days of scale, those decisions were based solely on algorithms. Round robin. Least connections. Fastest response. These stalwart mechanisms still exist today, but have slowly and surely become just another factor in the decision-making process rather than the only factor.
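To make those three algorithms concrete, here is a minimal sketch in Python. The `Backend` class, its fields, and the pool members are hypothetical and exist only for illustration; a real proxy would track these counters itself.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Backend:
    # Hypothetical view of a resource as a load balancer might track it.
    name: str
    active_connections: int = 0
    avg_response_ms: float = 0.0


def round_robin(backends):
    """Return an endless rotation over the backends, ignoring their load."""
    return itertools.cycle(backends)


def least_connections(backends):
    """Pick the backend currently holding the fewest open connections."""
    return min(backends, key=lambda b: b.active_connections)


def fastest_response(backends):
    """Pick the backend with the lowest observed average response time."""
    return min(backends, key=lambda b: b.avg_response_ms)


# Illustrative pool; the numbers are made up.
pool = [
    Backend("app-1", active_connections=12, avg_response_ms=40.0),
    Backend("app-2", active_connections=3, avg_response_ms=95.0),
    Backend("app-3", active_connections=7, avg_response_ms=22.0),
]

rotation = round_robin(pool)
first_four = [next(rotation).name for _ in range(4)]
```

Note that the three functions can disagree about the same pool: the backend with the fewest connections here is not the one responding fastest, which is part of why no single algorithm is the whole answer.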

That’s because we no longer rely solely on connection-based decision-making processes (a.k.a. ‘plain old load balancing’). When load balancing was primarily about managing TCP connections, distribution schemes based on connections made sense. But scale is now based as much on architecture as it is on algorithms, and distribution of requests can be a complex calculation involving many variables above (literally) and beyond layer 4 protocols.

Complicating distribution is the reality that today’s architectures are increasingly distributed themselves. Not just across clouds, but across containers, too. Multiple versions of the same app (or API) might be in service at the same time. The system responsible for distributing the request must be aware of those versions, able to distinguish between them, and able to ensure delivery to the right endpoint.

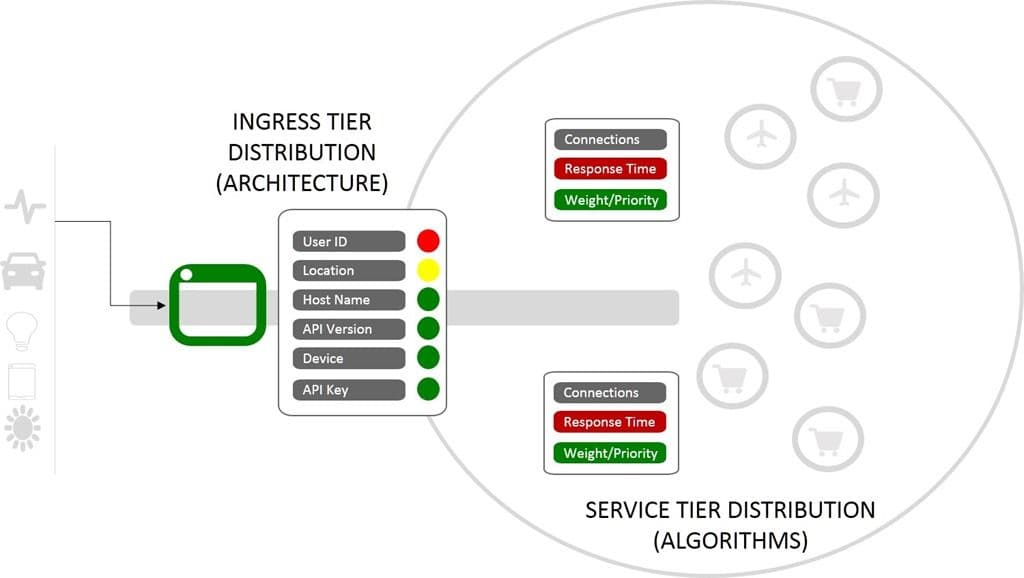

Today, decisions are made based not only on connection capacity, but increasingly on layer 7 (HTTP) parameters. Host names. API methods (a.k.a. the URI). API keys. Custom HTTP headers with version numbers embedded. Location. Device. User. Requests are evaluated in the context in which they are made, and decisions are made in microseconds.
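A layer 7 decision of this kind might look something like the sketch below. Everything named here is an assumption for illustration: the `Request` shape, the `api.example.com` host, the `X-API-Version` header, and the pool names are hypothetical, not taken from any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    # Hypothetical, simplified view of an incoming HTTP request.
    host: str
    path: str
    headers: dict = field(default_factory=dict)


def choose_pool(request):
    """Pick a destination pool from HTTP-level context, not connection counts."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    headers = {k.lower(): v for k, v in request.headers.items()}
    # Assumed convention: a custom header carries the API version, default "1".
    version = headers.get("x-api-version", "1")

    if request.host == "api.example.com":
        if request.path.startswith("/orders"):
            # Two versions of the same API in service at the same time.
            return "orders-v2" if version == "2" else "orders-v1"
        return "api-default"
    return "web-default"
```

For example, a request for `/orders/42` on `api.example.com` with `X-API-Version: 2` lands on `orders-v2`, while the same request without the header lands on `orders-v1`.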

Distribution today requires a tiered, architectural approach. The deeper in the app architecture you go, the less granular you get. By the time a proxy is making a load balancing decision between X clones of the same microservice, it may well be using nothing more than traditional algorithm-driven equations. Meanwhile, at the outer edges of the environment, ingress controllers make sometimes complex decisions based on HTTP-layer variables.
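The two tiers can be sketched together in a few lines of Python. This is only an illustration under stated assumptions: the pool names, replica addresses, and the `/catalog` route are hypothetical, and round robin stands in for whatever algorithm the inner proxy actually uses.

```python
import itertools

# Hypothetical pools: each service version maps to its replica addresses.
POOLS = {
    "catalog-v1": ["10.0.1.10:8080", "10.0.1.11:8080"],
    "catalog-v2": ["10.0.2.10:8080", "10.0.2.11:8080", "10.0.2.12:8080"],
}

# Inner tier state: one independent round-robin rotation per pool.
_rotations = {name: itertools.cycle(replicas) for name, replicas in POOLS.items()}


def outer_tier(path, api_version):
    """Ingress-style decision: choose a pool from HTTP-level context."""
    if path.startswith("/catalog"):
        return "catalog-v2" if api_version == "2" else "catalog-v1"
    raise LookupError(f"no route for {path}")


def inner_tier(pool_name):
    """Proxy-style decision: plain round robin across identical replicas."""
    return next(_rotations[pool_name])


def distribute(path, api_version):
    """Run the request through both tiers and return the chosen replica."""
    return inner_tier(outer_tier(path, api_version))
```

The outer function knows about versions and URIs but nothing about individual replicas; the inner one knows about replicas and nothing about the request.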

On the outside, distribution is driven by architecture. On the inside, by algorithms.

Both are critical components – the most critical, perhaps – of scaling containers.

Both rely on accurate, real-time information on the status (health) of the resources they might distribute requests to. We'll get to monitoring in the next few posts in this series.
