Colloquialisms. These are words and phrases with a uniquely local meaning that can confound those not native to the area. For example, when I’m out and about and thirsty, I look for a bubbler. You probably (guessing you are not a 'Sconnie) look for a water fountain. In Wisconsin, we measure distances by time, not miles. Traffic lights are “stop n' go” lights. 'Cause that’s what you do.

My husband’s favorite (he’s not a native) to laugh at is “C’mere once.” I won’t try to explain it beyond it makes sense to me, and to the kids I use it on. And we inherently understand that “Up North” is not a direction, but a place whose location may be specific to the speaker but carries a connotation common to every Wisconsin native describing “that place we go to escape everything.”

You probably have your own list, having grown up somewhere else. But this is my blog, so I get to use mine.

The point today is not, however, a lesson in semantics per se, but more about its applicability to a more localized phenomenon right in your own back yard. If your back yard were your organization, at least.

The rise of containers and their quite necessary automated clustering control systems (often referred to as orchestration) has had the unintended consequence of forcing colloquialisms from one side of the state line to another. That’s app dev into IT proper, by the way.

The Curious Case of Container Colloquialisms

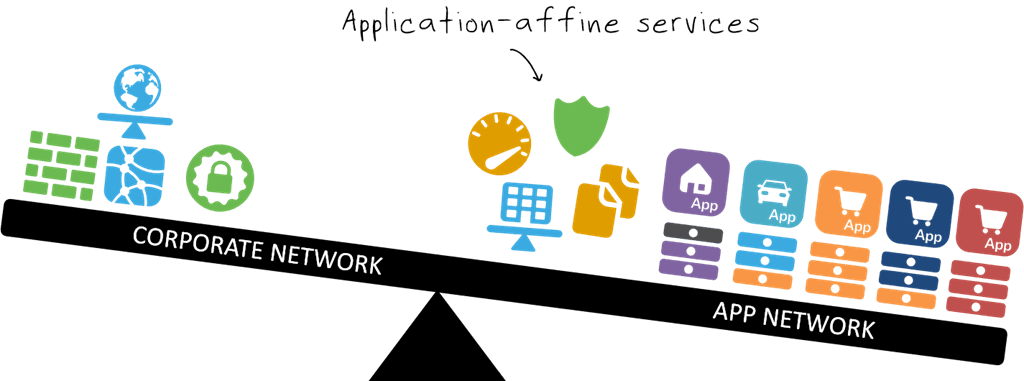

Achieving the flexibility and reliability that scale requires has meant migrating certain previously production-only services into the container “environment.” These functions are ostensibly “baked in” to that environment now, using lightweight integration (APIs and message queuing) to achieve what heretofore could only be realized from a fully implemented cloud computing environment: auto-scaling, highly available applications.

As a result, load balancing functions are now native to the pods/nodes via small, daemon-like services. While not highly advanced (we’re talking barely above network load balancing capabilities) they do the job they were designed to do. These services can be (and are) pluggable, if you will, enabling other projects (open source and vendor provided) that unlock more advanced capabilities (and one hopes, algorithms).

But in themselves, these load balancing functions do not enable the scale and high availability we’re ultimately looking for (and need, in production environments). Nor are they capable of routing APIs – which require HTTP (L7) smarts. We need that if we’re going to efficiently scale out modern applications backed by microservices and fronted by API façades. We need a more robust solution.

That’s where ingress controllers enter the picture. These are the “load balancers” that dissect and direct ingress traffic based on URIs and HTTP headers to enable application layer routing and scalability.

What’s happened is that the developers who created (and subsequently use) ingress controllers have basically recreated what we (in netops) would refer to as traffic management, or application delivery, or content switching/routing. We’ve used a lot of different terms over the years in netops, as have those over in dev(ops). App routing and page routing are also terms developers have used to describe L7 routing. The concept is not unfamiliar to either group. But the terms – the colloquialisms – are.

An ingress controller is tasked with routing requests to the appropriate (virtual) service within the container cluster. That service might be another load balancing proxy or it might be a container system-specific construct. In either case, the role of the ingress controller is to route traffic based on layer 7 (HTTP) values carried in the HTTP request. Usually this is the URI, but it could be the host name, or it could be some other custom header value (like a version number or API key).
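To make that concrete, here is a minimal sketch of that kind of L7 routing decision in Python. It is not how any particular ingress controller is implemented. The rule table, the host names, the service names, and the `X-API-Version` header are all made up for illustration.

```python
from typing import Optional

# Hypothetical routing rules: (host, path prefix, backend service name).
# A host of None means "match any host".
RULES = [
    ("api.example.com", "/v2/", "orders-v2"),
    ("api.example.com", "/", "orders-v1"),
    (None, "/static/", "assets"),
]


def route(host: str, path: str, headers: Optional[dict] = None) -> Optional[str]:
    """Return the backend name for a request, or None if no rule matches."""
    headers = headers or {}

    # A custom header (hypothetical name) can override URI-based routing.
    # Real HTTP header names are case-insensitive; this sketch assumes the
    # caller has already normalized them.
    if headers.get("X-API-Version") == "2":
        return "orders-v2"

    # Collect every rule whose host and path prefix match the request.
    candidates = [
        (prefix, backend)
        for rule_host, prefix, backend in RULES
        if rule_host in (None, host) and path.startswith(prefix)
    ]
    if not candidates:
        return None

    # The longest matching prefix wins, so "/v2/" beats "/".
    return max(candidates, key=lambda c: len(c[0]))[1]
```

Under these assumed rules, `route("api.example.com", "/v2/orders")` picks `orders-v2`, while a plain `/orders` on the same host falls through to `orders-v1`. Swap "rule table" for "policy" and netops will recognize content switching.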

Once the ingress controller has extracted that value from the request, it uses policies described by resource files to determine how to distribute the request. It can distribute requests equally, or send 75% to one version of the service and 25% to another. It’s pretty flexible that way. The ingress controller further has monitoring (health and status) responsibilities, and needs to be very careful it doesn’t distribute a request to a “dead” service.
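A weighted split with a health check can be sketched in a few lines of Python. This is only an illustration of the idea, assuming the weights come from some policy and the set of healthy services comes from some monitor. The function name `pick_backend` and the service names are hypothetical.

```python
import random


def pick_backend(weights, healthy, rng=random):
    """Choose a backend by weight, skipping any that is not marked healthy.

    weights: dict of backend name -> relative weight (e.g. 75 and 25)
    healthy: set of backend names currently passing health checks
    rng:     the random module or a random.Random instance
    """
    # Never consider a "dead" service, whatever its weight says.
    live = {name: w for name, w in weights.items() if name in healthy and w > 0}
    if not live:
        return None  # nothing healthy to send the request to

    names = list(live)
    # random.choices performs a weighted draw; k=1 returns a one-item list.
    return rng.choices(names, weights=[live[n] for n in names], k=1)[0]
```

Calling `pick_backend({"v1": 75, "v2": 25}, {"v1", "v2"})` repeatedly sends roughly three of every four requests to `v1`. If `v2` drops out of the healthy set, everything goes to `v1` until it comes back.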

Sound familiar, netops? It should, because those are basically the functions of a smart (L7 capable) proxy (like BIG-IP).

Now that you know how they’re the same, let me assure you there are some differences. Notably, an ingress controller is configured declaratively. That is, its configuration is determined by a description in a resource file outside the controller itself. This is not like traditional smart proxies that control and direct ingress traffic. Traditional smart proxies are authoritative sources of the environment. An ingress controller is not. It looks elsewhere for that, to files that act as a sort of “abstraction layer” that allows flexibility in implementations. That means the ingress controller (or a complementary component) has to read the description, interpret it, and create the configuration appropriate to it. And it has to keep that configuration up to date. While there is less variability at the ingress point of a containerized environment than deeper in the system, things still change, and those changes have to be watched for.
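The read, interpret, and keep-up-to-date cycle is often called reconciliation. Here is a minimal Python sketch of it, assuming the desired state has already been parsed from a resource file into a dict of path to service name. The `reconcile` function and the paths are hypothetical, and a real controller would watch for changes rather than be called by hand.

```python
def reconcile(desired: dict, current: dict) -> list:
    """Bring `current` in line with `desired`; return the actions taken.

    desired: routes described by the resource file (the source of truth)
    current: routes the proxy is running with right now (mutated in place)
    """
    actions = []

    # Routes that are new, or that now point somewhere else.
    for path, backend in desired.items():
        if path not in current:
            actions.append(("add", path, backend))
        elif current[path] != backend:
            actions.append(("update", path, backend))

    # Routes the description no longer mentions.
    for path in current:
        if path not in desired:
            actions.append(("remove", path, None))

    # Apply the changes only after the diff is complete.
    for op, path, backend in actions:
        if op == "remove":
            del current[path]
        else:
            current[path] = backend

    return actions
```

Run it once and the running configuration matches the description. Run it again with nothing changed and it returns an empty list, which is the point: the description is authoritative, and the controller just keeps chasing it.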

When all is said and done, the ingress controller is responsible for application layer routing of requests from the outside to the appropriate resource inside the containerized environment. Which is pretty much the definition of a smart load balancer.

The name may have changed, but the functions remain very much the same.
