Everyone is rushing into the gold mine that is generative AI. At least that’s the way it seems on the surface. Barely a press release hits my inbox these days that doesn’t mention generative AI in some way.

But dig deeper and you quickly realize that the biggest impact generative AI is having right now is on marketing.

What we’re seeing right now is folks grabbing at low-hanging fruit. Any capability that’s easy to “bolt on” without significant development or research is being slapped on with super glue and shipped out the door.

Most companies—whether technology or manufacturing or healthcare—are taking a more measured approach. A more strategic approach, if I may say so. And since this is my blog, I can.

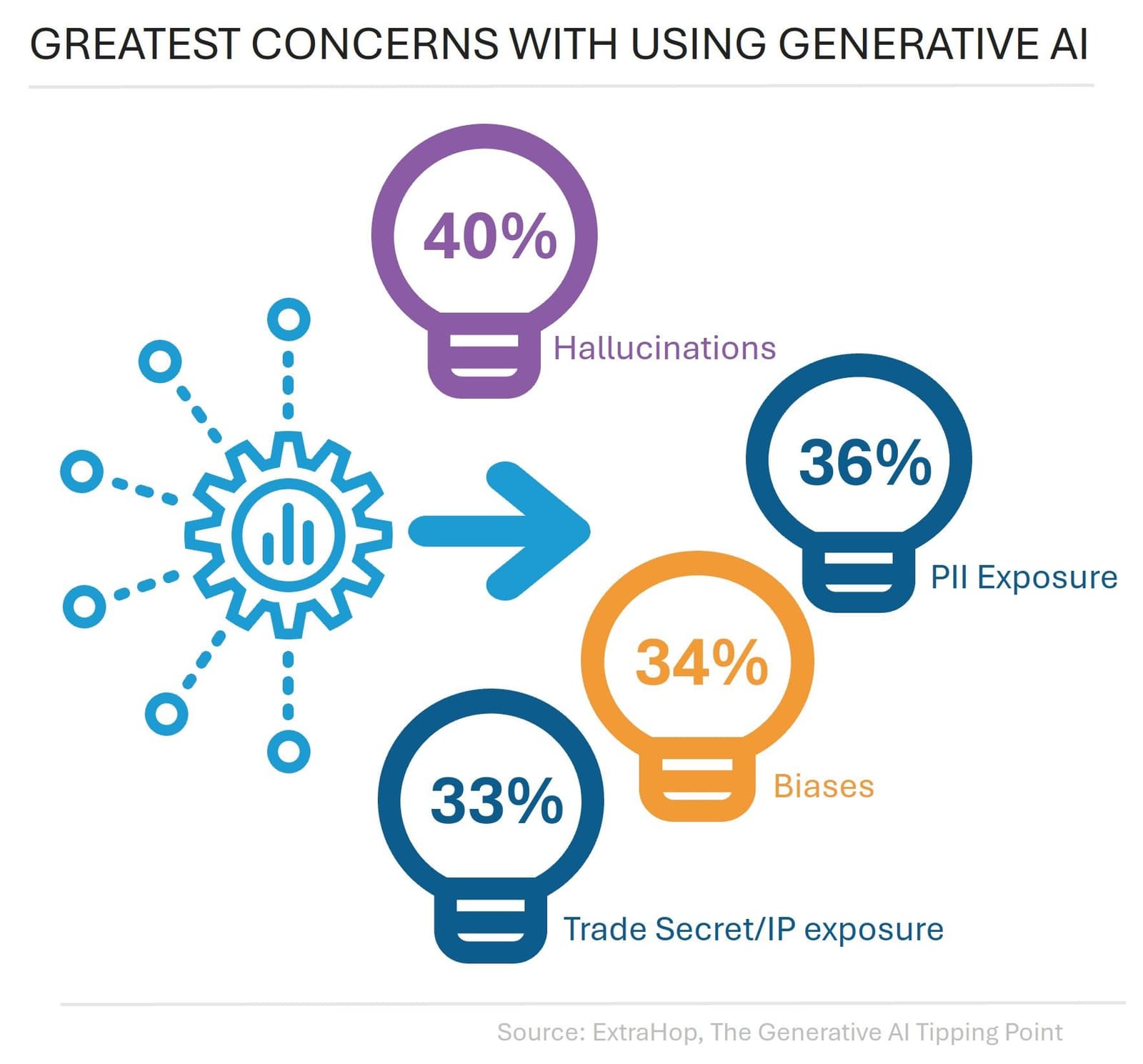

The reasons for being more critical of how you use generative AI are well known, if we judge awareness by a recent ExtraHop survey. The greatest concerns fall into two camps: reliability and security.

In the domain of generative AI, reliability is all about accuracy and trust. I must be able to trust that the responses I receive—whether code, config, or content—are accurate and correct, and not full of biases.

In the security demesne, we have the same old concerns we’ve always had—and more. Now it’s not just exposure of customer and employee PII, but it’s also about trade secrets and intellectual property leaking out into the wide, wide world.

Solving for Generative AI Risks

Solving challenges with reliability takes a lot of work because they either require (a) a lot of training and fine-tuning or (b) an architectural approach in which generative AI is only part of the solution and leverages advanced prompt engineering techniques to validate its own responses. Both require time and money, and that’s why we don’t see generative AI solutions shaping markets. Yet. That will come, but it will take time.

The security challenge is both easier and more difficult to address. Solving the challenge of exposing trade secrets or intellectual property means either (a) deploying and operating your own instances of an LLM—and all that entails—or (b) architecting around the problem. This means developing GPT agents that use the generative capabilities of an LLM as a tool but not as the primary source.

For example, it’s easy to forget that data you collect may be of strategic importance. The model I use to track market activities may seem innocuous but exposes a lot of how F5 thinks about the market and plans to compete in that market. It has strategic significance. Thus, this isn’t something you want to hand off to a public LLM for analysis. Add to that the reality that generative AI is horrible—and I do mean horrible—at analyzing tabular data, and this seems like a bad use case.

But by leveraging OpenAI functions to take advantage of Python’s data analysis capabilities, it isn’t a bad idea at all. This takes time and development effort because you need to build a GPT agent instead of simply handing the data to OpenAI for analysis, but it solves for both the challenge of reliability and security.

In the case of accidental exposure of customer or employee PII, we are already seeing an easier solution, that of data masking.

Data Masking for Generative AI

Now, data masking was already on the rise for use in development as it allows developers to test with real data without the risk of exposing sensitive data. But it’s equally applicable for use with generative AI as a way to prevent exposure. There are a number of open source libraries and tools already available that make it easy to integrate because, well, most generative AI is API-driven and APIs are easy to intercept and inspect requests for sensitive data.

Using data masking to solve for generative AI security concerns works in both development and production, ensuring that sensitive data is not exposed throughout the application life cycle.

It’s certainly the case that data masking was on the rise before generative AI showed up and took the stage from every other technology. But generative AI is likely to prove a catalyst for data masking capabilities within the application delivery and security domain as well as within app development.

See how F5 solutions power and protect AI-based apps ›

About the Author

Related Blog Posts

Multicloud chaos ends at the Equinix Edge with F5 Distributed Cloud CE

Simplify multicloud security with Equinix and F5 Distributed Cloud CE. Centralize your perimeter, reduce costs, and enhance performance with edge-driven WAAP.

At the Intersection of Operational Data and Generative AI

Help your organization understand the impact of generative AI (GenAI) on its operational data practices, and learn how to better align GenAI technology adoption timelines with existing budgets, practices, and cultures.

Using AI for IT Automation Security

Learn how artificial intelligence and machine learning aid in mitigating cybersecurity threats to your IT automation processes.

Most Exciting Tech Trend in 2022: IT/OT Convergence

The line between operation and digital systems continues to blur as homes and businesses increase their reliance on connected devices, accelerating the convergence of IT and OT. While this trend of integration brings excitement, it also presents its own challenges and concerns to be considered.

Adaptive Applications are Data-Driven

There's a big difference between knowing something's wrong and knowing what to do about it. Only after monitoring the right elements can we discern the health of a user experience, deriving from the analysis of those measurements the relationships and patterns that can be inferred. Ultimately, the automation that will give rise to truly adaptive applications is based on measurements and our understanding of them.

Inserting App Services into Shifting App Architectures

Application architectures have evolved several times since the early days of computing, and it is no longer optimal to rely solely on a single, known data path to insert application services. Furthermore, because many of the emerging data paths are not as suitable for a proxy-based platform, we must look to the other potential points of insertion possible to scale and secure modern applications.