The need for scalability isn’t even a question these days. The answer is always yes, you need it. Business growth depends on the operational growth of applications that are, after all, part and parcel of today’s business models, no matter what the industry. So systems are generally deployed with whatever is necessary to achieve scale when, not if, it’s needed. That usually means a proxy. And the proxies responsible for scale (usually load balancers) are, therefore, pretty critical components in any modern architecture.

It’s not just that these proxies serve as the mechanism for scaling; it’s that they’re increasingly expected to do so automagically through the wonders of auto-scale. That simple, hyphenated word implies a lot, such as the ability to programmatically instruct a system not only to launch additional capacity but also to make sure the proxy responsible for scalability knows the new capacity exists and can direct requests to it.
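
Stripped to its essentials, that promise is a two-step control loop: launch capacity, then tell the proxy about it. The following is a minimal sketch under stated assumptions, not any vendor’s API. `launch_instance` is a hypothetical stand-in for a cloud provider’s provisioning call, `Proxy.add_member` is a hypothetical stand-in for a proxy’s management interface, and the 80% utilization threshold is arbitrary.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Proxy:
    """Hypothetical load-balancing proxy; only its pool membership is modeled."""
    pool: List[str] = field(default_factory=list)

    def add_member(self, address: str) -> None:
        # Hypothetical stand-in for a management-plane API call that registers a pool member.
        if address not in self.pool:
            self.pool.append(address)


def launch_instance(index: int) -> str:
    # Hypothetical provisioning call; returns the new instance's address.
    return f"10.0.0.{index}:8080"


def autoscale(proxy: Proxy, utilization: float, threshold: float = 0.8) -> Optional[str]:
    """Launch and register one instance if utilization exceeds the assumed threshold."""
    if utilization <= threshold:
        return None
    address = launch_instance(len(proxy.pool) + 1)
    # Launching capacity is only half the job: until the proxy knows the new
    # instance exists, no requests will ever be directed to it.
    proxy.add_member(address)
    return address
```

For example, `autoscale(Proxy(pool=["10.0.0.1:8080"]), utilization=0.92)` returns `"10.0.0.2:8080"` and leaves both addresses in the pool. The `add_member` step is the one that depends on the proxy being reachable for management.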

One assumes any proxy worth its salt has the programmatic interfaces required. The API economy isn’t just about sharing between apps and people, after all; it’s also about sharing between systems, on-premises and off, in the cloud. But what we often fail to recognize is that adding a new resource to a load balancing proxy happens on the management (or control) plane, not on the data plane.
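
The split can be illustrated with a toy proxy. In this sketch (class and method names are hypothetical, not any product’s API), the control plane publishes a new, immutable snapshot of the backend pool, and the data plane simply reads whichever snapshot is current when it picks a backend, round robin. Adding capacity therefore changes configuration without touching the code path that carries requests.

```python
import itertools
import threading
from typing import Iterable, Tuple


class LoadBalancer:
    """Toy proxy that separates configuration (control plane) from routing (data plane)."""

    def __init__(self, members: Iterable[str]) -> None:
        self._config_lock = threading.Lock()             # serializes control-plane writers
        self._members: Tuple[str, ...] = tuple(members)  # snapshot read by the data plane
        self._counter = itertools.count()

    # Control plane: changes configuration, never handles traffic.
    def add_member(self, address: str) -> None:
        with self._config_lock:
            if address not in self._members:
                # Replace the snapshot rather than mutating it in place.
                self._members = self._members + (address,)

    # Data plane: chooses a backend for each request, round robin.
    def pick(self) -> str:
        members = self._members  # one read of the current snapshot; no lock taken
        if not members:
            raise RuntimeError("no backends available")
        return members[next(self._counter) % len(members)]
```

With members `["a", "b"]`, four calls to `pick()` return `a, b, a, b`; after `add_member("c")`, the new backend joins the rotation on subsequent picks. Request routing never waits on the configuration lock, which is the point of keeping the planes apart.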

That makes sense. We don’t want to interfere with the running of the system while we’re gathering statistics or manipulating its configuration. That’s a no-no, especially when we’re trying to add capacity because the system is running full bore, delivering apps to eager users.

But what happens when the reverse occurs? When the running of the system interferes with the ability to manage the system?

Your auto-scaling (or manual scaling, for that matter) is going to fail, which means app capacity isn’t going to increase and the business is going to suffer.

That’s bad, as if you needed me to tell you that.

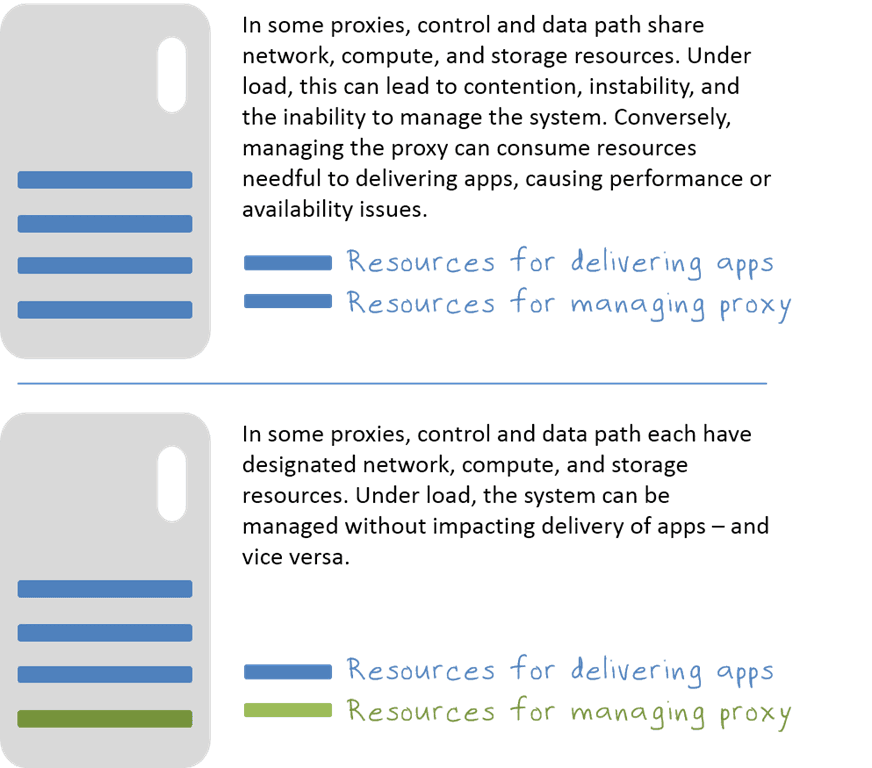

This happens because many proxies (which were not built with this paradox in mind) share system resources between the control and data planes. There is no isolation between them. The same network that delivers the apps is used to manage the proxy. The same RAM and compute assigned to deliver apps is assigned to manage the proxy. Under load, the two planes compete for those resources, and the data plane almost always wins. If you’re trying to add app resources to the proxy in order to scale and you can’t access the system to do it, you’re pretty much in trouble.
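
A toy model makes the paradox visible. The sketch below assumes a proxy with a fixed number of worker slots, modeled as a `threading.Semaphore`. This is an illustrative simplification, since real proxies contend for CPU, memory, and network bandwidth. It saturates the data plane and then attempts a management call. When both planes share one pool of slots, the call times out; when management has a dedicated slot, it goes through.

```python
import threading


def try_management_call(slots: threading.Semaphore, timeout: float = 0.1) -> bool:
    """Attempt a management operation that needs one worker slot."""
    if not slots.acquire(timeout=timeout):
        return False  # starved: no slot freed up before the timeout
    try:
        return True  # stand-in for the actual configuration change
    finally:
        slots.release()


def simulate(isolated: bool, capacity: int = 4) -> bool:
    """Saturate the data plane, then see whether a management call gets through."""
    data_slots = threading.Semaphore(capacity)
    # Shared design: management draws from the same slots as app traffic.
    # Isolated design: management has one dedicated slot of its own.
    mgmt_slots = threading.Semaphore(1) if isolated else data_slots
    for _ in range(capacity):
        data_slots.acquire()  # every data-plane slot is busy delivering apps
    try:
        return try_management_call(mgmt_slots)
    finally:
        for _ in range(capacity):
            data_slots.release()
```

`simulate(isolated=False)` returns `False` after the timeout, while `simulate(isolated=True)` returns `True`. The slot counts are arbitrary. What matters is whether management has resources that traffic cannot claim.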

That’s why it’s important to evaluate your choice of proxy with an eye toward manageability. Not just its ease of management. Not just its API and scripting capabilities, but its manageability under load. Proxies designed specifically for massive scale should have a separate set of resources designated as “management” resources, so that no matter how heavily the data plane is loaded delivering apps, the proxy can still be managed and monitored.

In the network world we call this out-of-band management, because it occurs outside the data path: the primary path, the critical path.
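
The following is a minimal sketch of the idea using only Python’s standard library. Application traffic and management traffic arrive on separate listeners, and only the management listener accepts configuration changes. The handler names, the JSON body shape, and the backend addresses are all hypothetical. In a real deployment, the management listener would bind to an address on a dedicated management interface. Here, both bind to loopback so the sketch runs anywhere. The sketch also models only the separate network path. Both listeners share one Python process, so it does not capture the dedicated CPU and memory a purpose-built proxy would reserve for management.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

POOL = ["10.0.0.1:8080"]  # hypothetical backend pool
POOL_LOCK = threading.Lock()


class DataHandler(BaseHTTPRequestHandler):
    """Data plane: serves application traffic and accepts no configuration."""

    def do_GET(self) -> None:
        body = b"app response\n"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


class ManagementHandler(BaseHTTPRequestHandler):
    """Management plane: adds pool members, reachable only on its own listener."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        member = json.loads(self.rfile.read(length))["member"]
        with POOL_LOCK:
            if member not in POOL:
                POOL.append(member)
        self.send_response(204)  # No Content: change accepted
        self.end_headers()


def serve(handler: type, host: str) -> ThreadingHTTPServer:
    """Start a listener on host, on an OS-assigned port, in a background thread."""
    server = ThreadingHTTPServer((host, 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Calling `serve(DataHandler, "127.0.0.1")` and `serve(ManagementHandler, "127.0.0.1")` yields two independent listeners. A POST of `{"member": "10.0.0.2:8080"}` to the management port adds the backend. The same POST to the data port is refused with a 501, because `DataHandler` defines no `do_POST` and the standard library rejects unsupported methods.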

The ability to manage a proxy out of band, even under load, is essential to scaling the apps (automagically or manually) and, through them, the business.
