As the worlds of DevOps and NetOps collide and container environments subsume definitions traditionally used in the network, it seems prudent to explore how the often-confusing term "ingress" is used with respect to the data path and to container environments.

Ingress and egress as terms have classically been used to describe the direction of traffic on the network from the perspective of the data center. Ingress is inbound, egress is outbound.

As container environments have matured, the term ingress has acquired a very specific, application-focused definition.

Ingress: An API object that manages external access to the services in a cluster, typically HTTP. Ingress can provide load balancing, SSL termination and name-based virtual hosting.

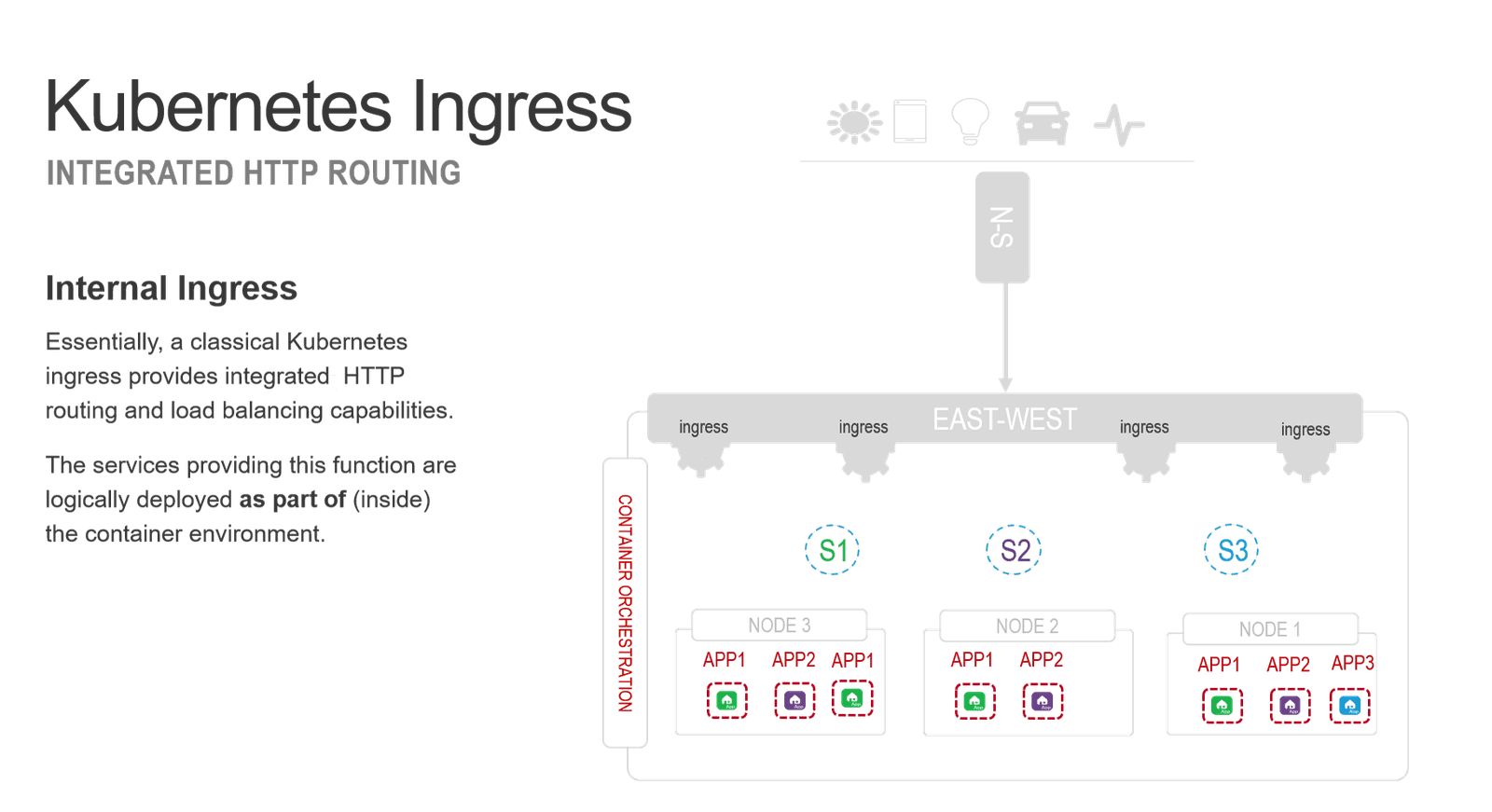

It is important to pause and note that an ingress resource as defined in a Kubernetes environment describes capabilities assumed to execute within the container perimeter itself.

Each ingress is implemented by a reverse proxy (an ingress controller) that accepts external requests and, based on the rules specified in the Kubernetes ingress resource, directs those requests to the correct Kubernetes service. The service in turn load balances requests across its associated containers, usually by way of native layer 4 (TCP) load balancing. This is one of the ways a unified API is presented to the outside world: the ingress parses each API call (specifically its URI path) and distributes it to the appropriate container-hosted microservice inside the cluster.
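To make this two-step flow concrete, here is a minimal Python sketch; it is not how any particular ingress controller is implemented. The `Ingress` and `Service` classes, their method names, and the addresses are hypothetical. Longest-prefix matching on whole path segments approximates Kubernetes "Prefix" path matching, and simple round-robin stands in for the service's layer 4 balancing.

```python
from itertools import cycle


class Service:
    """Stands in for a Kubernetes Service: a stable name in front of container endpoints."""

    def __init__(self, name: str, endpoints: list[str]) -> None:
        self.name = name
        self._next = cycle(endpoints)  # round-robin, a stand-in for L4 balancing

    def pick_endpoint(self) -> str:
        return next(self._next)


class Ingress:
    """Hypothetical ingress: path-prefix rules that map URI paths to services."""

    def __init__(self, rules: dict[str, Service]) -> None:
        self.rules = rules

    @staticmethod
    def _matches(prefix: str, path: str) -> bool:
        # Match whole path segments, so "/orders" matches "/orders/42" but not "/ordersx".
        base = prefix.rstrip("/")
        return path == base or path.startswith(base + "/")

    def route(self, path: str) -> tuple[str, str]:
        candidates = [p for p in self.rules if self._matches(p, path)]
        if not candidates:
            raise LookupError(f"no ingress rule matches {path!r}")
        best = max(candidates, key=len)  # longest matching prefix wins
        svc = self.rules[best]
        return svc.name, svc.pick_endpoint()


orders = Service("orders", ["10.0.1.10:8080", "10.0.1.11:8080"])
users = Service("users", ["10.0.2.10:8080"])
ingress = Ingress({"/api/orders": orders, "/api/users": users})
print(ingress.route("/api/orders/42"))  # ('orders', '10.0.1.10:8080')
print(ingress.route("/api/orders/43"))  # ('orders', '10.0.1.11:8080')
print(ingress.route("/api/users/7"))    # ('users', '10.0.2.10:8080')
```

The ingress decides *which service* based on the URI path (layer 7); the service then decides *which container* without looking at the request at all.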

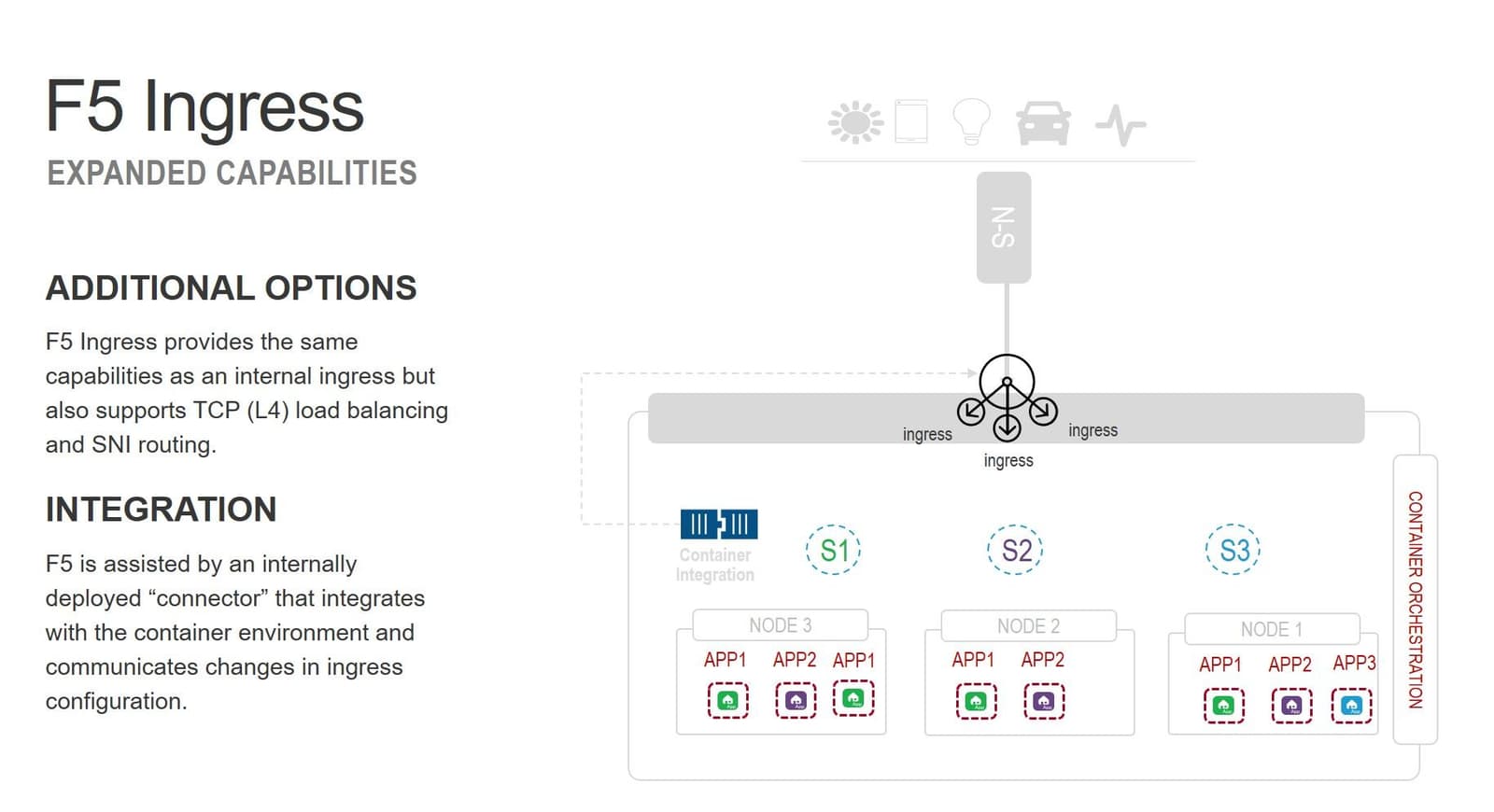

F5 provides the same capabilities as a classical Kubernetes ingress and adds SNI routing and layer 4 (TCP) load balancing. SNI (Server Name Indication) routing benefits those who want end-to-end TLS encryption of message exchanges: it lets F5 route requests based on the server name the client presents in the unencrypted part of the TLS handshake, without decrypting the actual payload or message. While this restricts the range of functionality that can be applied to the request (for example, it cannot be scanned for malicious content), it supports architectures in which content must remain encrypted for regulatory or operational reasons. Layer 4 (TCP) load balancing is often used outside the container environment to scale Kubernetes-style ingress services.
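SNI routing works without decryption because the server name travels in cleartext in the TLS ClientHello. The Python sketch below, an illustration rather than F5's implementation, walks the ClientHello layout far enough to read the `server_name` extension (defined in RFC 6066) and looks the name up in a routing table. `SNI_ROUTES`, the pool names, and `build_client_hello` (which builds a minimal test message) are hypothetical, and the parser assumes the whole ClientHello arrives in a single TLS record.

```python
import struct
from typing import Optional

# Hypothetical routing table: server name -> backend pool.
SNI_ROUTES = {"shop.example.com": "pool-shop", "api.example.com": "pool-api"}


def extract_sni(record: bytes) -> Optional[str]:
    """Return the host name from a TLS ClientHello record, or None if absent.

    Only the cleartext handshake bytes are read; nothing is decrypted.
    """
    try:
        # Record header: content type 0x16 (handshake), version (2), length (2).
        if record[0] != 0x16:
            return None
        pos = 5
        # Handshake header: type 0x01 (ClientHello), then a 3-byte length.
        if record[pos] != 0x01:
            return None
        pos += 4
        pos += 2 + 32                          # client_version + random
        pos += 1 + record[pos]                 # session_id
        (n,) = struct.unpack_from("!H", record, pos)
        pos += 2 + n                           # cipher_suites
        pos += 1 + record[pos]                 # compression_methods
        (ext_total,) = struct.unpack_from("!H", record, pos)
        pos += 2
        end = pos + ext_total
        while pos + 4 <= end:
            ext_type, ext_len = struct.unpack_from("!HH", record, pos)
            pos += 4
            if ext_type == 0x0000:             # server_name extension
                # list length (2), name_type (1, 0 = host_name), name length (2), name
                (name_len,) = struct.unpack_from("!H", record, pos + 3)
                if record[pos + 2] == 0:
                    return record[pos + 5 : pos + 5 + name_len].decode("ascii")
            pos += ext_len
    except (IndexError, struct.error, UnicodeDecodeError):
        pass  # truncated or malformed input
    return None


def build_client_hello(server_name: str) -> bytes:
    """Build a minimal ClientHello carrying only an SNI extension (for testing)."""
    name = server_name.encode("ascii")
    sni_body = struct.pack("!HBH", len(name) + 3, 0, len(name)) + name
    extensions = struct.pack("!HH", 0x0000, len(sni_body)) + sni_body
    body = (
        b"\x03\x03" + bytes(32)                # client_version + zeroed random
        + b"\x00"                              # empty session_id
        + b"\x00\x02\x13\x01"                  # one cipher suite
        + b"\x01\x00"                          # one compression method (null)
        + struct.pack("!H", len(extensions)) + extensions
    )
    handshake = b"\x01" + len(body).to_bytes(3, "big") + body
    return b"\x16\x03\x01" + struct.pack("!H", len(handshake)) + handshake


def route_by_sni(record: bytes, default: str = "pool-default") -> str:
    name = extract_sni(record)
    return SNI_ROUTES.get(name.lower(), default) if name else default


print(route_by_sni(build_client_hello("api.example.com")))  # pool-api
```

Everything after the handshake stays opaque to the router, which is exactly why payload inspection is not possible in this mode.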

F5 is typically deployed outside the container environment. It is often used as a load balancing solution to expose clusters externally, that is, to provide public access to services hosted in a container cluster. A CNCF survey found that 67% of respondents choose a load balancer to expose cluster services externally, while another 33% use ingress (L7) capabilities.
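At layer 4, a load balancer in front of the cluster chooses a destination per TCP connection, using only addresses and ports. The following is a minimal Python sketch, assuming a least-connections policy (one common choice, not necessarily what a given deployment uses); `L4Pool` and the ingress instance names are hypothetical.

```python
class L4Pool:
    """Hypothetical external L4 load balancer in front of several ingress instances.

    Each new TCP connection goes to the member with the fewest open connections;
    nothing above layer 4 (addresses and ports) is inspected.
    """

    def __init__(self, members: list[str]) -> None:
        self.active = {m: 0 for m in members}  # open connections per member

    def open_connection(self) -> str:
        # min() returns the first member among ties, in listed order.
        member = min(self.active, key=self.active.__getitem__)
        self.active[member] += 1
        return member

    def close_connection(self, member: str) -> None:
        self.active[member] -= 1


pool = L4Pool(["ingress-a:443", "ingress-b:443"])
first = pool.open_connection()    # ingress-a:443
second = pool.open_connection()   # ingress-b:443
pool.close_connection(first)
third = pool.open_connection()    # ingress-a:443 again, now the least loaded
```

Because the decision is per connection, adding another ingress instance to the pool scales the L7 tier without changing any routing rules.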

To provide the same capabilities as a Kubernetes ingress, we use a container-native "connector" that keeps F5's traffic policies up to date. This connector resides inside, and integrates with, the container orchestrator (typically Kubernetes, though Red Hat OpenShift is also popular) and communicates with F5 ingress via API. Updates include changes to ingress resource definitions (HTTP routing policies) as well as configuration changes, such as the launch or removal of a container instance that affects an existing service definition.
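The connector's job can be pictured as translating orchestrator events into a declarative configuration for the external proxy. In this Python sketch, `EndpointEvent`, `Connector`, and the JSON payload shape are all hypothetical and do not reflect F5's actual configuration schema or API; the HTTP call that would deliver the payload is omitted.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class EndpointEvent:
    """Hypothetical, simplified orchestrator event for one container endpoint."""
    service: str
    address: str
    kind: str  # "ADDED" or "DELETED"


class Connector:
    """Tracks pool membership per service and renders it as a JSON payload.

    The payload shape is illustrative only, not F5's configuration schema.
    """

    def __init__(self) -> None:
        self.pools: dict[str, set[str]] = {}

    def handle(self, event: EndpointEvent) -> str:
        members = self.pools.setdefault(event.service, set())
        if event.kind == "ADDED":
            members.add(event.address)
        elif event.kind == "DELETED":
            members.discard(event.address)
        else:
            raise ValueError(f"unsupported event kind: {event.kind!r}")
        return self.render()

    def render(self) -> str:
        # Send the full desired state each time, so repeated updates are idempotent.
        return json.dumps({"pools": {name: sorted(m) for name, m in sorted(self.pools.items())}})


connector = Connector()
connector.handle(EndpointEvent("orders", "10.0.1.10:8080", "ADDED"))
payload = connector.handle(EndpointEvent("orders", "10.0.1.11:8080", "ADDED"))
print(payload)  # {"pools": {"orders": ["10.0.1.10:8080", "10.0.1.11:8080"]}}
```

Rendering the complete desired state, rather than a delta, means a missed or duplicated update is corrected by the next one.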

The advantage of using F5 over simple ingress services is the ability to apply advanced capabilities to inbound and outbound traffic. Security, header enrichment, and client-specific performance optimizations can be applied with F5 without modifying the container environment, its architecture, or the application itself.
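Header enrichment is the simplest of these to picture: the proxy adds context to each request before forwarding it, so the application receives that context without any code changes. A minimal Python sketch, assuming the widely used `X-Forwarded-For` and `X-Forwarded-Proto` conventions; `enrich_headers` is a hypothetical name, and the plain dict ignores the case-insensitivity of real HTTP header names.

```python
def enrich_headers(headers: dict[str, str], client_ip: str, tls: bool) -> dict[str, str]:
    """Return a copy of the request headers with proxy-added client context.

    Simplification: a plain dict is case-sensitive, unlike real HTTP header names.
    """
    enriched = dict(headers)
    prior = enriched.get("X-Forwarded-For")
    # Append this hop's client address to any existing chain.
    enriched["X-Forwarded-For"] = f"{prior}, {client_ip}" if prior else client_ip
    enriched["X-Forwarded-Proto"] = "https" if tls else "http"
    return enriched


print(enrich_headers({"Host": "shop.example.com"}, "203.0.113.7", tls=True))
# {'Host': 'shop.example.com', 'X-Forwarded-For': '203.0.113.7', 'X-Forwarded-Proto': 'https'}
```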

Additional Resources:

- Read the Docs: F5 Container Ingress Services

- Download from the Docker Store: F5 Container Ingress Services
