Generative AI (GenAI) chatter is coming from everywhere. The questions are: What’s catching on? How is it making the world a better place? Where is the business value? These questions are just as relevant to organizations figuring out if, and when, to implement GenAI in their operations (AIOps). Based on my past year of experiments with generative AI and broad exposure to industry trends during my daily research at F5, I offer the following five takeaways to help organizations understand the impact of GenAI on their operational data practices. With that understanding, these organizations will be better positioned to align GenAI technology adoption timelines with their existing budgets, practices, and cultures.

1. GenAI models love semi-structured and unstructured data

Operational data is a hodgepodge of semi-structured data (objects) and unstructured data sets. Large language models (LLMs) are flexible and effective across this range of data formats, which makes them well suited to analyzing operational data sets. Organizations can run a range of in-house experiments and evaluations to verify the efficacy, ease of use, and cost of various GenAI-enabled solutions. Using LLM inference to detect interesting data patterns with fewer false positives brings the speed and scale of machines in line with the goals of the teams that consume operational data flows.

2. Organizations don’t need to build models

Organizations that know which techniques suit their specific tasks, and which models use those techniques, don’t have to build their own models. For example, named entity recognition (NER), a branch of natural language processing (NLP), is proving to be an effective technique for identifying key elements within semi-structured data. In NER, an entity type can be defined by a list of members, such as the days of the week, or by a description, such as whole numbers greater than 1 and less than 5. The result is greater accuracy during inference than with rule-based pattern-matching techniques that are not GenAI-enabled. As research and practice with techniques like NER continue to advance, operations teams can focus on applying the techniques that have proved successful rather than on building models.

NER example:

Named Entity: Days of the week

List: Sunday, Monday, Tuesday, Wednesday, Thursday, Friday, Saturday

Figure 1. Named entity recognition provides greater accuracy during inference than rule-based pattern matching.
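To make the two ways of defining an entity type concrete, the following Python sketch implements the kind of rule-based baseline that LLM-based NER is compared against: a fixed list for days of the week and a predicate for whole numbers greater than 1 and less than 5. The function and label names (`tag_entities`, `DAY_OF_WEEK`, `SMALL_WHOLE_NUMBER`) are hypothetical and for illustration only; this is not NER itself and not an F5 implementation.

```python
import re
from typing import List, Tuple

# Entity type defined by a list of members (a fixed lookup list).
DAYS_OF_WEEK = {"sunday", "monday", "tuesday", "wednesday",
                "thursday", "friday", "saturday"}


def is_small_whole_number(token: str) -> bool:
    """Entity type defined by a description: whole numbers greater than 1 and less than 5."""
    return token.isdecimal() and 1 < int(token) < 5


def tag_entities(text: str) -> List[Tuple[str, str]]:
    """Return (token, entity_type) pairs found by simple rule-based matching."""
    tags = []
    for token in re.findall(r"\w+", text):
        if token.lower() in DAYS_OF_WEEK:
            tags.append((token, "DAY_OF_WEEK"))
        elif is_small_whole_number(token):
            tags.append((token, "SMALL_WHOLE_NUMBER"))
    return tags


print(tag_entities("Backup failed 3 times on Monday and 7 times on Fri"))
# [('3', 'SMALL_WHOLE_NUMBER'), ('Monday', 'DAY_OF_WEEK')]
```

The abbreviation "Fri" is missed because it is not in the list. That brittleness to variations in wording is one reason a learned NER model can outperform fixed rules on messy operational data.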

3. Data gravity is real

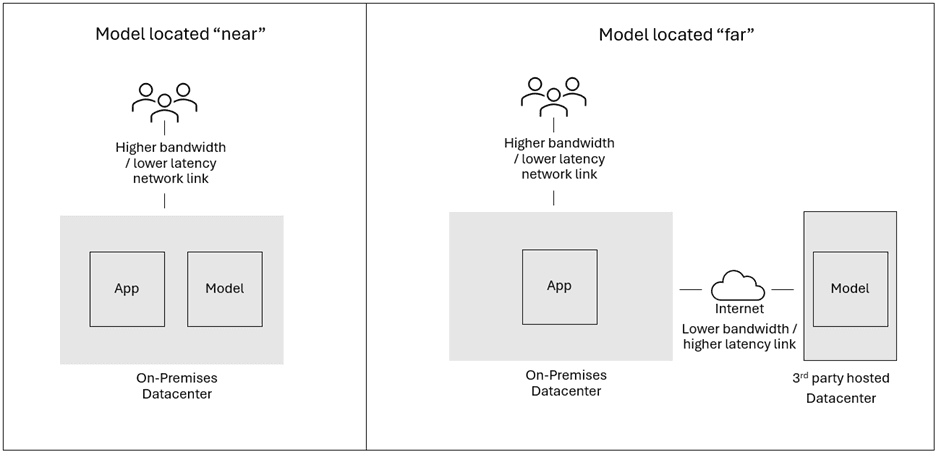

Data gravity is an underlying force that influences decisions about whether to place compute nearer to where data is created or to move data closer to where compute is already deployed. The greater the volume of data, the stronger the gravitational force, resulting in increased compute capacity placed nearer to it. For training (creating and tuning models), data is aggregated and moved closer to compute. For inferencing (using models), the model is moved closer to where the prompt is issued.
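A back-of-the-envelope calculation shows why volume creates gravity: the time needed to move a data set grows linearly with its size. The sketch below assumes decimal terabytes, a sustained link rate, and an optional efficiency factor for protocol overhead; it ignores compression and is an illustration, not a sizing tool. The function name `transfer_time_hours` is hypothetical.

```python
def transfer_time_hours(data_terabytes: float, link_gbps: float,
                        efficiency: float = 1.0) -> float:
    """Hours to move data_terabytes (decimal TB) over a link of link_gbps.

    efficiency < 1.0 is an assumed allowance for protocol overhead and contention.
    """
    bits = data_terabytes * 1e12 * 8
    bits_per_second = link_gbps * 1e9 * efficiency
    return bits / bits_per_second / 3600


# Each tenfold increase in volume means a tenfold increase in transfer time.
for tb in (1, 10, 100):
    print(f"{tb:>4} TB over 10 Gbps: {transfer_time_hours(tb, 10):.1f} h")
# prints 0.2 h, 2.2 h, and 22.2 h respectively
```

At these scales, repeatedly shipping a large data set to remote compute quickly becomes more expensive than placing compute next to the data.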

If a model is accessed by bringing a copy in-house, rather than by calling the API of an instance hosted by a third party, it makes sense to move the model closer to the prompt and/or to any additional private data set vectorized as part of the prompt. On the other hand, if the model is hosted by a third party that exposes its API over the internet, then the model and the inference operations do not move at all. In these cases, inference requests and private data vectors can be moved to a “network near” location, either through a data center colocation interconnect or, where possible, by matching hosting locations with the model provider.

Awareness of the forces that pull data and compute together, as well as those that push them apart, leads to informed choices when seeking the right balance between cost and performance.

4. Don’t ignore data silos; deal with them

With GenAI processing, it’s more important than ever to break down data silos to simplify and speed up operational data analysis. However, for the foreseeable future, it appears that data silos will remain, if not proliferate.

The question is more about how to deal with data silos and what technology choices to make. In terms of accessing data stored in multiple locations, the choices are to copy and move the data or to implement a logical data layer that uses federated queries without moving the data. Regardless of which choice is made, recognizing the streaming data sources that exist and evaluating operational use cases for time/data freshness constraints will help you select the necessary elements of your data technology stack, such as streaming engines, query engines, data formats, and catalogs. Technology choice gives data teams the power to choose the most effective and easy-to-use technologies while balancing performance and cost. Ideally, an organization’s data practice matures with time while always giving the organization the flexibility to choose what works best for it at a given stage of maturity.
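The federated-query option can be illustrated at small scale with SQLite's `ATTACH DATABASE`, which lets one connection query several separate databases in place. In the Python sketch below, each attached in-memory database stands in for a silo, and the single connection plays the role of a logical data layer. This is an assumption-laden stand-in: a production logical data layer would federate across different systems and locations, and the schema names (`netops`, `secops`) and tables are hypothetical.

```python
import sqlite3

# One connection acts as a minimal "logical data layer"; each attached
# database is a separate store standing in for a data silo.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS netops")
conn.execute("ATTACH DATABASE ':memory:' AS secops")

conn.execute("CREATE TABLE netops.latency (host TEXT, p99_ms REAL)")
conn.execute("CREATE TABLE secops.alerts (host TEXT, severity TEXT)")
conn.executemany("INSERT INTO netops.latency VALUES (?, ?)",
                 [("web-1", 120.0), ("web-2", 45.0)])
conn.executemany("INSERT INTO secops.alerts VALUES (?, ?)",
                 [("web-1", "high"), ("db-1", "low")])

# A single query joins rows across both silos without copying either table.
rows = conn.execute("""
    SELECT l.host, l.p99_ms, a.severity
    FROM netops.latency AS l
    JOIN secops.alerts AS a ON a.host = l.host
""").fetchall()
print(rows)  # [('web-1', 120.0, 'high')]
```

The copy-and-move alternative would instead insert both tables into one central database before querying, trading storage and freshness for simpler queries.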

5. Automation is a friend—don’t fear it

When solutions add automation, they scale by turning tacit knowledge of experts in data privacy and SecOps into a repeatable AIOps-enabled practice that can be executed by machines. Only then are data, security, and privacy teams freed up to add intelligence. Intelligence enhances the efficacy of policies by more finely defining how specific data can be used by whom, for how long, and for what purpose—all while tracking where the data lives, which copies are being made, and with whom it is being shared. This frees up time for strategic planning, new technology evaluation, and communicating with the business to refine data access policies and approve exceptions.
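Policies like these can be expressed as code so that machines can enforce them consistently. The Python sketch below is a minimal, hypothetical model (the `DataPolicy` and `PolicyEngine` names are illustrative, not a product API) that checks who may use a data set, for what purpose, and for how long, and records each approved copy and its destination.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class DataPolicy:
    """Hypothetical policy: who may use a data set, for what, and for how long."""
    dataset: str
    allowed_roles: FrozenSet[str]
    allowed_purposes: FrozenSet[str]
    max_retention: timedelta


@dataclass
class PolicyEngine:
    policies: Dict[str, DataPolicy]
    # Each entry records (dataset, requesting role, destination) for an approved copy.
    copy_log: List[Tuple[str, str, str]] = field(default_factory=list)

    def allowed(self, dataset: str, role: str, purpose: str,
                created_at: datetime, now: datetime) -> bool:
        policy = self.policies.get(dataset)
        if policy is None:
            return False  # deny by default when no policy covers the data set
        return (role in policy.allowed_roles
                and purpose in policy.allowed_purposes
                and now - created_at <= policy.max_retention)

    def share(self, dataset: str, role: str, purpose: str,
              created_at: datetime, now: datetime, destination: str) -> bool:
        """Approve a copy only if the policy allows it, and track where it went."""
        if not self.allowed(dataset, role, purpose, created_at, now):
            return False
        self.copy_log.append((dataset, role, destination))
        return True


# Usage with illustrative values.
policy = DataPolicy("auth_logs", frozenset({"secops"}),
                    frozenset({"incident_response"}), timedelta(days=30))
engine = PolicyEngine({"auth_logs": policy})
created = datetime(2024, 1, 1, tzinfo=timezone.utc)

print(engine.share("auth_logs", "secops", "incident_response",
                   created, created + timedelta(days=10), "ir-archive"))  # True
print(engine.share("auth_logs", "marketing", "analytics",
                   created, created + timedelta(days=10), "crm"))         # False
print(engine.share("auth_logs", "secops", "incident_response",
                   created, created + timedelta(days=45), "ir-archive"))  # False
print(engine.copy_log)  # [('auth_logs', 'secops', 'ir-archive')]
```

Denying by default when no policy exists keeps unknown data sets from being shared silently; the exceptions that teams approve can then be added as explicit policies.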

Speed, scale, and automation are characteristics of a mature AIOps practice, leading to better output, faster decisions, and optimized human capital. GenAI is opening doors that technology has been unable to open…until now. The five takeaways above provide some trail markers for IT operations, security operations, and privacy operations to consider as these teams implement GenAI in their AIOps. AI models, the proximity of compute to operational data, access to siloed data, and automation are key pieces of the new AIOps platform. Within this rich learning environment, organizations can build the culture and practices of technology operations for the current, and succeeding, generation.

For a deeper dive into generative AI’s impact on data, read the latest Digital Enterprise Maturity Index report from F5.
