Data orchestration

Data orchestration automates movement, transformation, and governance of data across complex data and AI pipelines.

Data orchestration refers to the automated coordination, transformation, and movement of data across systems, pipelines, and environments. It ensures that data is collected, standardized, governed, and delivered reliably to analytics, applications, and AI workloads.

Data orchestration oversees the entire data lifecycle, from ingestion and transformation to activation and delivery across data lakes, warehouses, operational systems, and AI pipelines. It automates workflows that replace manual handoffs and siloed scripts, ensuring data remains accurate, timely, and consistently formatted in distributed environments.

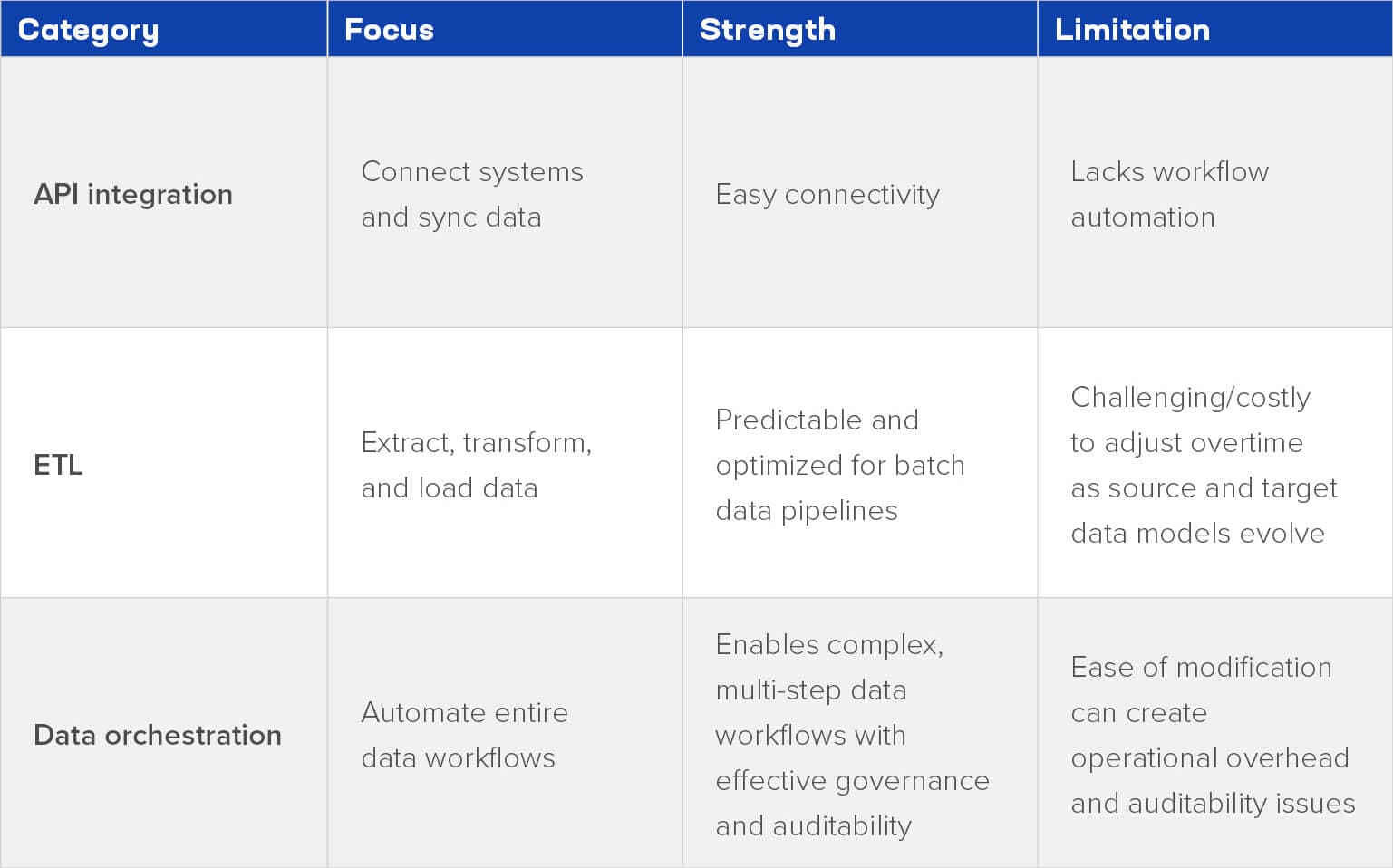

Data integration combines data from multiple sources but typically focuses on connectivity. ETL tools extract, transform, and load data into target systems, often in batch mode.

Data orchestration coordinates all data workflows, including batch, streaming, multicloud, analytics, and AI, ensuring they run in the right order with quality, governance, and monitoring.
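To make "running in the right order" concrete, the following is a minimal sketch of dependency-aware ordering using Python's standard-library `graphlib`. The task names and the dependency graph are hypothetical and only illustrate the idea; production orchestrators add scheduling, retries, and monitoring on top of this kind of ordering.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph (an assumption for illustration):
# each key maps to the set of tasks that must finish before it starts.
dependencies = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "clean_orders": {"ingest_orders"},
    "join_customer_orders": {"clean_orders", "ingest_customers"},
    "quality_check": {"join_customer_orders"},
    "publish_to_warehouse": {"quality_check"},
}


def execution_order(graph):
    """Return one valid run order.

    Raises graphlib.CycleError if the graph contains a circular dependency.
    """
    return list(TopologicalSorter(graph).static_order())


order = execution_order(dependencies)
print(order)
```

Several orderings can be valid here (the two ingest tasks are independent of each other), so the sketch guarantees only that every task appears after the tasks it depends on.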

As organizations expand into multicloud deployments, real-time analytics, and AI-driven applications, the volume and speed of data exceed what manual processes can handle. Data orchestration ensures that pipelines continuously deliver high-quality, governed data to the downstream systems that process it.

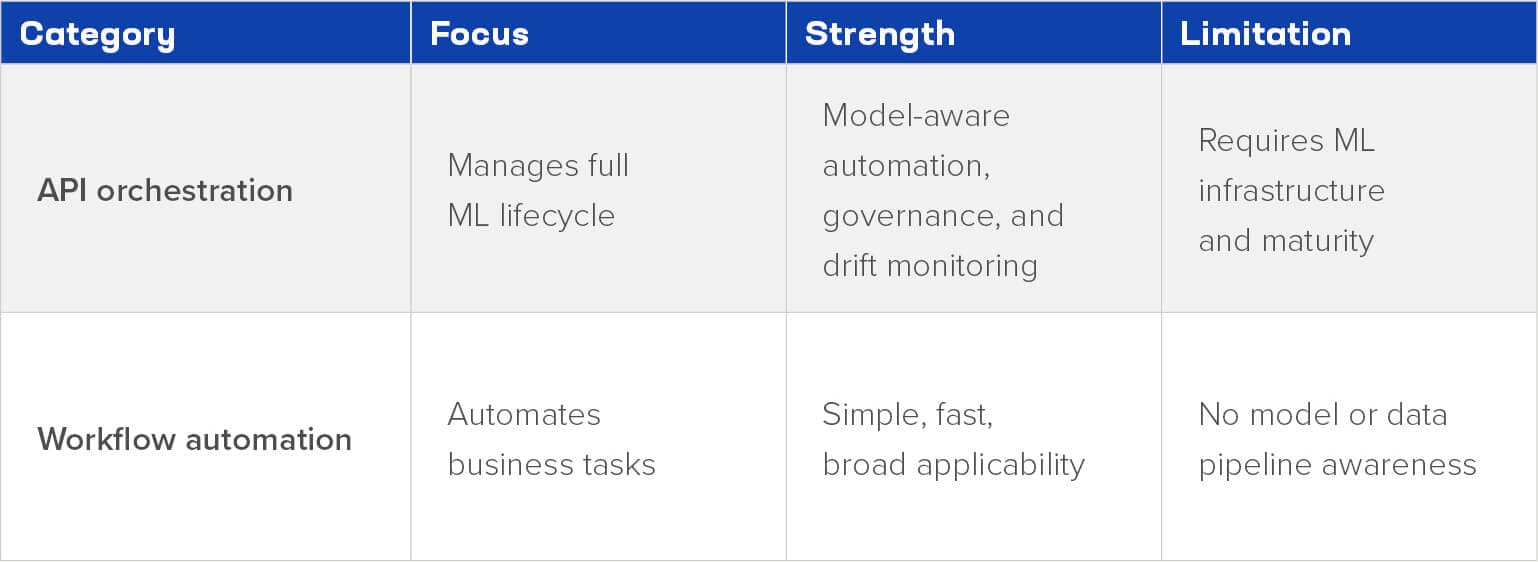

Data orchestration is foundational to data management; it encompasses data workflows, data collection, data cleaning, and the delivery of usable data. AI orchestration extends data orchestration to cover the entire machine learning (ML) lifecycle: coordinating feature pipelines (data structured specifically for predictions), model training (resource scheduling and dataset management), model deployment, monitoring of model drift and accuracy, and feedback loops for retraining or tuning.

Data orchestration provides several benefits for enterprises:

Data orchestration typically follows four stages:

1. Ingest and collect data: Connects to databases, SaaS systems, logs, APIs, IoT devices, and streaming platforms.

2. Transform and standardize data: Applies schema validation, cleanup, normalization, enrichment, and quality checks to data.

3. Activate and deliver data: Routes refined data to data lakes, warehouses, BI tools, operational systems, and AI/ML pipelines.

4. Monitor, troubleshoot, and optimize workflows: Tracks performance, lineage, data freshness, and pipeline health.
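The four stages above can be sketched in a few lines of Python. This is an illustrative toy, not a reference implementation: the sample records, the `RunReport` fields, and the in-memory `warehouse` list are all assumptions standing in for real sources, metrics, and delivery targets.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical raw records standing in for data pulled from an API or log source.
RAW_EVENTS = [
    {"user_id": "42", "amount": "19.99", "country": "us"},
    {"user_id": "", "amount": "5.00", "country": "DE"},           # missing user_id
    {"user_id": "7", "amount": "not-a-number", "country": "fr"},  # invalid amount
    {"user_id": "13", "amount": "100", "country": "gb"},
]


@dataclass
class RunReport:
    """Minimal monitoring record for one pipeline run (stage 4)."""
    ingested: int = 0
    delivered: int = 0
    rejected: int = 0
    finished_at: str = ""


def ingest():
    """Stage 1: collect records from the (hypothetical) source."""
    return list(RAW_EVENTS)


def transform(records):
    """Stage 2: validate and normalize; records that fail validation are set aside."""
    clean, rejected = [], []
    for record in records:
        try:
            if not record["user_id"]:
                raise ValueError("missing user_id")
            clean.append({
                "user_id": int(record["user_id"]),
                "amount": float(record["amount"]),
                "country": record["country"].upper(),
            })
        except (KeyError, ValueError):
            rejected.append(record)
    return clean, rejected


def deliver(records, sink):
    """Stage 3: route refined records to a target (here, a plain list)."""
    sink.extend(records)
    return len(records)


def run_pipeline(sink):
    """Run stages 1-3 in order and return a report for stage 4."""
    report = RunReport()
    raw = ingest()
    report.ingested = len(raw)
    clean, rejected = transform(raw)
    report.rejected = len(rejected)
    report.delivered = deliver(clean, sink)
    report.finished_at = datetime.now(timezone.utc).isoformat()
    return report


warehouse = []
report = run_pipeline(warehouse)
print(report)
```

Keeping rejected records and counts in the report, rather than silently dropping bad rows, is what gives the monitoring stage something to act on.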

Key components of a data orchestration system include:

F5 enables organizations to operate orchestrated data and AI pipelines securely, reliably, and at scale by strengthening the underlying traffic, APIs, and observability fabric on which these pipelines depend.

Secure and governed data delivery: AI data pipelines rely on APIs, services, and distributed storage systems. F5 protects these paths with inline inspection, API governance, role-based access control (RBAC), and encrypted traffic management, ensuring sensitive data moves safely and securely across hybrid and multicloud environments.

Optimized, high-performance connectivity: Data orchestration often spans multiple networks, clouds, and applications. The F5 Application Delivery and Security Platform (ADSP) delivers high-bandwidth routing, load balancing, and performance optimization to minimize latency and prevent bottlenecks across pipelines.

AI governance and model-aware protection: F5 AI Guardrails enforces real-time policy controls by monitoring requests, responses, and data flows between applications and AI models. It prevents data leakage, blocks unsafe traffic, and ensures only compliant interactions are permitted.

Unified visibility for data and AI pipelines: F5 AI Assistant delivers cross-environment observability, analyzing logs, lineage, telemetry, and policy data to identify bottlenecks and performance issues across distributed pipelines.

Data orchestration delivers major operational and strategic advantages:

Enterprises apply data orchestration across a wide range of operational and analytical workflows:

Data orchestration strengthens governance by embedding controls directly into the data lifecycle:

AI orchestration involves the oversight, management, and automation of all elements used in developing, deploying, and maintaining ML and AI systems. It extends data orchestration across the whole AI lifecycle by handling feature pipelines, training processes, model packaging, deployment, monitoring, and improvement.

AI orchestration covers ML operations (MLOps) workflows, model governance, operational observability, infrastructure readiness, and policy enforcement across hybrid and multicloud environments.
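As one illustration of the monitoring step, the sketch below computes a Population Stability Index (PSI) between a training sample and a live sample of a single feature, then flags the model for retraining when the score crosses a threshold. PSI is one common drift measure among several and is used here as an example, not as the method any particular platform applies. The bin edges, the sample values, and the 0.25 threshold (a widely used rule of thumb) are assumptions for illustration.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples over shared bin edges.

    Empty bins are floored at `eps` so the logarithm stays defined.
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for value in values:
            # Bin index = number of edges the value has reached or passed.
            index = sum(1 for edge in edges if value >= edge)
            counts[index] += 1
        total = len(values)
        return [max(count / total, eps) for count in counts]

    expected_p = proportions(expected)
    actual_p = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected_p, actual_p))


def needs_retraining(score, threshold=0.25):
    """Assumed policy: treat a PSI above the threshold as significant drift."""
    return score > threshold


# Hypothetical feature values seen during training and in production.
training = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
live = [0.7, 0.8, 0.85, 0.9, 0.9, 0.95, 0.95, 0.99, 0.6, 0.97]
edges = [0.25, 0.5, 0.75]

score = psi(training, live, edges)
print(f"PSI={score:.3f}, retrain={needs_retraining(score)}")
```

In an orchestrated setup, a check like this would typically run on a schedule and trigger the retraining pipeline rather than just printing a result.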

AI orchestration systems standardize and automate each stage of the ML lifecycle:

AI orchestration simplifies and strengthens enterprise AI operations:

AI orchestration platforms are purpose-built for ML and GenAI; they understand model lifecycle requirements, provide model-specific monitoring, support distributed training, and enforce security and compliance for AI.

Capabilities typically include:

Generic workflow automation, on the other hand, covers traditional workflow engines (e.g., business process management suites and job schedulers) that automate business processes but lack ML capabilities. They do not track training runs, monitor model drift, manage GPU infrastructure, or enforce model-specific governance.

Enterprises use AI orchestration to scale AI programs beyond proof-of-concept:

AI orchestration embeds governance directly into model operations, ensuring enterprise AI remains safe, accountable, and compliant.

What is data orchestration, and how does it work, step by step?

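Data orchestration automates the movement, transformation, and delivery of data across distributed systems. It typically follows four steps: ingesting and collecting data; transforming and standardizing it; activating and delivering it to target systems; and monitoring, troubleshooting, and optimizing the workflows.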

How is data orchestration different from data integration and ETL tools?

Data integration connects systems; ETL tools extract, transform, and load data, often in batches. Data orchestration manages these workflows across batch, streaming, cloud, and AI environments, ensuring reliable pipelines with proper governance, quality checks, and monitoring.

What are the key components of a data orchestration system?

A typical orchestration platform includes:

What are the main benefits and use cases of data orchestration for businesses?

Benefits include greater efficiency, faster insights, improved reliability, and scalability across hybrid environments. Use cases include real-time dashboards, customer systems, fraud detection, supply chain analytics, regulatory reporting, and AI/ML training pipelines.

What is AI orchestration, and how does it relate to data orchestration and MLOps?

AI orchestration manages the entire machine learning lifecycle, including data prep, training, deployment, monitoring, and improvement, by coordinating components that convert data into models and models into production. It works with MLOps to enhance governance, automation, and lifecycle tracking.

What are common tools and platforms for data and AI orchestration?

Common data orchestration tools include Apache Airflow, Dagster, Prefect, and cloud-native schedulers. AI orchestration platforms include MLflow, Kubeflow, Vertex AI Pipelines, SageMaker Pipelines, Databricks Workflows, and specialized MLOps solutions. Most enterprises use a combination that fits their data stack, cloud provider, and model strategy.

What are the biggest challenges in implementing AI orchestration, and how can teams address them?

Challenges include fragmented data, inconsistent training, lack of lineage visibility, manual deployment, model drift and bias, and compliance issues. Teams can address these by adopting standardized pipelines, implementing lineage and observability tools, automating deployment, enforcing governance policies, and monitoring throughout the lifecycle.

How do data and AI orchestration support governance and compliance?

They embed governance into workflows by tracking data lineage and transformations, enforcing access controls, maintaining audit trails, and validating how models use data. In AI orchestration, this includes tracking model versions, data, decisions, and performance to support transparency and compliance.
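A lineage or audit record can be as simple as a structured entry written at each pipeline step. The sketch below is one possible shape, using only the Python standard library; the field names, the `svc-pipeline` actor, and the masking step are hypothetical and not drawn from any specific governance product.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(records):
    """Stable SHA-256 digest of a dataset, used to tie outputs back to inputs."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def lineage_entry(step, actor, inputs, outputs):
    """Build one audit record describing what a step consumed and produced."""
    return {
        "step": step,
        "actor": actor,
        "input_hash": fingerprint(inputs),
        "output_hash": fingerprint(outputs),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


# Hypothetical transformation: mask an email address before delivery.
audit_log = []
raw = [{"email": "a@example.com", "spend": 10}]
masked = [{"email": "***", "spend": 10}]
audit_log.append(lineage_entry("mask_pii", "svc-pipeline", raw, masked))

print(json.dumps(audit_log, indent=2))
```

Hashing the inputs and outputs, instead of storing the data itself, lets an auditor confirm which dataset version a step used without copying sensitive values into the log.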

Where should enterprises start if they are new to data and AI orchestration?

Organizations should start with a few high-impact pipelines, such as key feature pipelines or analytics workflows, and then expand. Establishing centralized metadata, lineage, and quality controls early reduces complexity later. Teams should also assess orchestration tools that align with their cloud strategy and adopt scalable governance frameworks for data and AI workloads.

Data and AI orchestration are vital to modern data strategies, keeping pipelines reliable and automated as enterprises expand real-time analytics, deploy larger models, and adopt multicloud environments. Together they help ensure proper data flow, high-quality inputs, and consistent AI behavior. Success depends on strong infrastructure, including data networks, security, and delivery technologies. Without resilient traffic management, secure endpoints, and visibility, even advanced platforms struggle under load.

Orchestration relies on a solid foundation. Resilient traffic management enables scalable data and model services. API security ensures governance and protects sensitive data. Unified observability offers cross-environment insights into performance, drift, errors, and behavior, helping teams operate pipelines confidently.

Enterprises should ask: Are pipelines reliable under load? Is governance consistent? Can issues be traced across clouds? Are networking, security, and delivery teams aligned with data and AI? If answers are “no” or “not sure,” consider rethinking orchestration and infrastructure as a unified system.

Learn more at f5.com.