Consumer Things. Business Things. Manufacturing Things.

Phones. Tablets. Phablets. Laptops. Desktops.

Compute intense. Network intense. Storage intense.

Home. Work. Restaurant. Car. Park. Hotel.

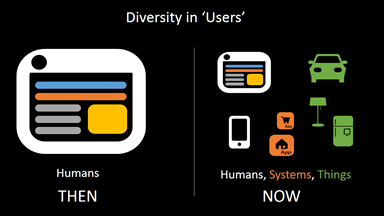

The landscape is shifting on both sides of the business – apps and clients. The term “user” no longer means just a human being. It includes systems and things, as well, that are driven automatically to connect, share, and interact with applications across the data center.

Consider, for example, the emerging microservices architectural trend, which breaks up monolithic applications into their component parts. Each part is its own service and presents an API (interface) through which other parts (services) and ‘users’ can communicate.

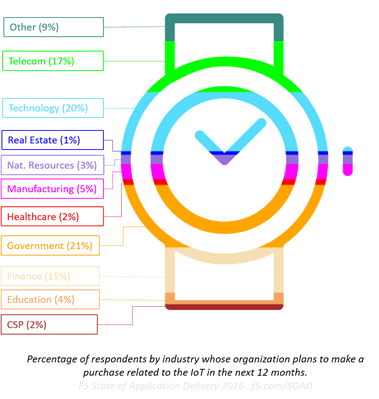

Not one of the 36% dabbling in microservices? (Typesafe, 2015) Sticking with well-understood app architectures won’t insulate you from the impact of the increasing diversity of ‘users’, especially if you’re diving into the Internet of Things. Our data says some of you are: 22% of all respondents believe it will be of strategic importance for the next 2-5 years, and 15% are getting a head start with plans to purchase technology to support IoT in the next 12 months.

That means “things” will have to be viewed like “users”, each with its own unique set of needs and requirements with respect to security and performance, not to mention availability.

That means that network and application services tasked with delivering an increasingly diverse set of applications to a growing set of clients in even more locations need to be able to differentiate between a human user and a thing user. To optimize performance and ensure security, it is imperative that the services responsible for performance and security are able to apply the right policy at the right time given the current set of variables.

That means they need to manage traffic (data and communications, in app terms) within the context of the entire transaction: the user, the app, and the purpose for which such communication is being attempted.

You can think of context much in the same way you might have been taught (if you’re old enough, and no, you don’t have to admit it if you’d rather not) about the five “Ws” you need to ask when you’re doing basic information gathering: who, what, where, when, and why. By interrogating traffic and extracting an answer to each of these questions, you can piece together enough context to be able to make an appropriate decision regarding how to treat the exchange. Deny it. Allow it. Scan it. Scrub it. Optimize it. Route it. These are the types of things app services do “in the network” and they do it better and with greater effect if they do it within the context of the exchange.

- Who

Identifies the user. Is it a human being? If so, corporate or consumer? Is it a thing, or another app? If so, what thing and what app? While my smart light bulb might have access to a data reporting app, it doesn’t have access to the accounting systems.

- What

What are they doing? Sending data? Asking for data? Searching? HTTP contains a wealth of data that can help determine this without digging very deep. Is it an HTTP POST or a GET? A PUT or a DELETE? The request method alone can give us clues as to the purpose of this exchange.

- Where

From where are they doing it? Are they at home? At headquarters? Across the street at Starbucks or halfway around the world? On a local network or over the Internet? Geolocation is a popular means of restricting access to apps, resources, and data. When delivering apps to users, regardless of their type, location matters, particularly if there are corporate policies against accessing certain types of data or apps from public places like the airport or the local Starbucks.

- When

What time of day is it? And more importantly, what time of day is it here as well as where the user is located? Are they trying to access a system at 3am from a location where it’s 8am? For example, we know from research that bot traffic has been seen to increase significantly after hours, between 6pm and 9pm. And we might put this data together with “who” and be able to instantly determine whether Alice, who works at corporate headquarters, should be working at 3am.

- Why

Why is like the non-existent layer 8. It can only be inferred from what, and requires that you are able to piece together clues from what is otherwise fairly basic data, like the URI (assuming it’s HTTP-based which, these days, is a fair assumption). Once we know what they’re doing, like sending data, we then may want to know why they’re sending it. Is it a profile update or are they sending sensor data? Are they doing a search or submitting an order?
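To make the five Ws concrete, here is a minimal Python sketch of how a proxy might derive them from one HTTP request. Everything in it is an assumption made for illustration: the `Request` and `Context` types, the `extract_context` function, the User-Agent heuristic for "who", and the method and URI-prefix mappings are hypothetical and do not describe any particular product's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Request:
    """Hypothetical, simplified view of an HTTP request at a proxy."""
    method: str             # e.g. "GET", "POST"
    path: str               # URI path, e.g. "/sensors/readings"
    user_agent: str         # raw User-Agent header value
    country: str            # assumed output of a geolocation lookup
    utc_offset_hours: int   # assumed client UTC offset from the same lookup
    received_at: datetime   # timezone-aware arrival time


@dataclass
class Context:
    who: str
    what: str
    where: str
    when: int   # hour of day where the client is located
    why: str


# Illustrative mappings only; a real policy would be far richer.
WHAT_BY_METHOD = {"GET": "read", "POST": "send", "PUT": "update",
                  "PATCH": "update", "DELETE": "delete"}
WHY_BY_PREFIX = {"/sensors": "sensor-report", "/profile": "profile",
                 "/search": "search", "/orders": "order"}


def extract_context(req: Request) -> Context:
    # Who: a crude human-vs-thing guess from the User-Agent header.
    who = "human" if "Mozilla" in req.user_agent else "thing"
    # What: inferred from the HTTP method alone.
    what = WHAT_BY_METHOD.get(req.method.upper(), "unknown")
    # When: shift the arrival time into the client's local time.
    client_tz = timezone(timedelta(hours=req.utc_offset_hours))
    local_hour = req.received_at.astimezone(client_tz).hour
    # Why: inferred from "what" plus the URI prefix.
    purpose = next((label for prefix, label in WHY_BY_PREFIX.items()
                    if req.path.startswith(prefix)), "unknown")
    return Context(who, what, req.country, local_hour, f"{what}:{purpose}")
```

Note that "why" is not read from the request directly. It is assembled from "what" and the URI, which mirrors the point that purpose can only be inferred.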

These are what make up context. It’s not necessarily the case that you need to collect all five (this isn’t Pokémon, after all) to be able to make a decision regarding the proper course of action to take. But you do need to have visibility into (access to) all five, in case you do. That’s why visibility into the entire network stack – from layers 2 through 7 – is so important to app services. Each one may need to evaluate a request or response within the context in which it was made, and only visibility into the full stack ensures you can reach in and grab that information when it’s needed.
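The idea that a decision may need only some of the five Ws can be sketched as a small rule table in Python. This is an illustration under stated assumptions, not a definitive policy engine: the rule format, the `decide` function, the context values such as `"payroll-export"`, and the choice of actions are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Context = Dict[str, object]
Rule = Tuple[Tuple[str, ...], Callable[[Context], bool], str]

# Each hypothetical rule lists the Ws it needs, a predicate, and an action.
RULES: List[Rule] = [
    # A thing may report sensor data and nothing else.
    (("who", "why"),
     lambda c: c["who"] == "thing" and c["why"] != "sensor-report", "deny"),
    # Sensitive exports are not allowed from public locations.
    (("where", "why"),
     lambda c: c["where"] == "public" and c["why"] == "payroll-export", "deny"),
    # A human active before 6am local time gets extra scrutiny.
    (("who", "when"),
     lambda c: c["who"] == "human" and c["when"] < 6, "scan"),
    # Inbound data is scanned regardless of who sent it.
    (("what",), lambda c: c["what"] == "send", "scan"),
]


def decide(context: Context, default: str = "allow") -> str:
    """Return the action of the first rule whose inputs are present and match."""
    for needed, predicate, action in RULES:
        # Skip rules whose Ws were not collected for this exchange.
        if all(key in context for key in needed) and predicate(context):
            return action
    return default
```

A call such as `decide({"who": "thing", "why": "profile"})` evaluates only the rules whose inputs are present, so a partial context still produces a decision. Rules are ordered from most to least restrictive, so a deny always wins over a scan.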

That’s one of the things that an intelligent proxy brings to the table: the visibility necessary for network, security, and infrastructure architects (and engineers) to implement policies that use context to ensure the security, speed, and reliability every user – no matter whether human, sensor, or software – ultimately needs.
